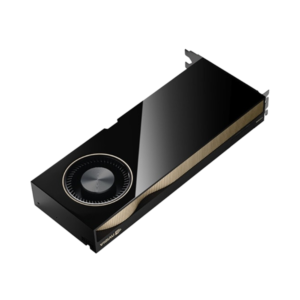

NVIDIA H100 Tensor Core GPU

The NVIDIA H100 is a data center GPU designed to accelerate demanding AI, machine learning, and high-performance computing workloads. Built on the Hopper architecture, it targets training of large AI models, deep learning inference, and complex simulations.

The H100 supports faster model development and scales across multi-GPU environments through NVLink. It is intended for data centers and HPC clusters serving AI, healthcare, and scientific research workloads.

Specifications:

GPU Architecture: NVIDIA Hopper

CUDA Cores: PCIe 14,592 / SXM 16,896

Tensor Cores: PCIe 456 / SXM 528 (Fourth-generation Tensor Cores)

GPU Memory: 80 GB

Memory Type: PCIe HBM2e / SXM HBM3 (High Bandwidth Memory)

Memory Bandwidth: PCIe 2 TB/s / SXM 3.35 TB/s

Peak FP64 Performance: PCIe 26 TFLOPS / SXM 34 TFLOPS (Double Precision)

Peak FP32 Performance: PCIe 51 TFLOPS / SXM 67 TFLOPS (Single Precision)

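Tensor Performance (TF32): PCIe 756 TFLOPS / SXM 989 TFLOPS (with sparsity)

Tensor Performance (FP16): PCIe 1,513 TFLOPS / SXM 1,979 TFLOPS (with sparsity)

Tensor Performance (INT8): PCIe 3,026 TOPS / SXM 3,958 TOPS (with sparsity)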

Tensor Performance (INT4): 1,920 TOPS

NVLink Support: Yes (For multi-GPU configurations)

Form Factor: PCIe Gen 5, SXM

Thermal Design Power (TDP): PCIe up to 350W / SXM up to 700W

Display Outputs: None (designed for data center and server use)

Target Applications: AI model training, deep learning inference, high-performance computing (HPC)
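
To give a rough sense of what the 80 GB memory figure means for model sizing, the sketch below estimates whether a model's weights alone would fit on a single card. It is an illustration under stated assumptions, not a sizing tool: the helper names (`weights_size_gb`, `fits_on_one_gpu`) are hypothetical, 1 GB is taken as 10^9 bytes, and activations, optimizer state, and framework overhead are ignored, so real workloads need noticeably more headroom.

```python
# Bytes needed to store one parameter at each precision (weights only).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# GPU memory from the specification list above.
GPU_MEMORY_GB = 80


def weights_size_gb(num_params: int, precision: str) -> float:
    """Size of the model weights in GB, assuming 1 GB = 10**9 bytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_one_gpu(num_params: int, precision: str,
                    memory_gb: float = GPU_MEMORY_GB) -> bool:
    """True if the weights alone fit in memory_gb (ignores all other memory use)."""
    return weights_size_gb(num_params, precision) <= memory_gb


if __name__ == "__main__":
    # Hypothetical model sizes chosen only for illustration.
    for num_params in (7_000_000_000, 70_000_000_000):
        for precision in BYTES_PER_PARAM:
            size = weights_size_gb(num_params, precision)
            verdict = "fits" if fits_on_one_gpu(num_params, precision) else "does not fit"
            print(f"{num_params:,} params @ {precision}: {size:.0f} GB, {verdict}")
```

Under these assumptions, a model whose weights exceed 80 GB at the chosen precision would need to be split across several GPUs, which is where the NVLink support listed above becomes relevant.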