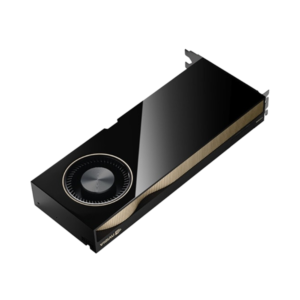

NVIDIA Tesla A100 Tensor Core GPU

The NVIDIA Tesla A100 is a data-center GPU designed to accelerate AI, deep learning, and high-performance computing (HPC) workloads. Built on NVIDIA’s Ampere architecture, the A100 combines 6,912 CUDA cores, 432 third-generation Tensor Cores, and 80 GB of high-bandwidth memory to speed up model training, data analysis, and scientific computing.

Ideal for research institutions, data centers, and enterprises, the A100 empowers teams to tackle complex AI tasks, run large-scale simulations, and accelerate innovation across various industries. Whether for AI model development, high-throughput computing, or large-scale inference, the Tesla A100 is engineered to help organizations achieve breakthrough results and drive the future of AI.

Specifications:

GPU Architecture: NVIDIA Ampere

CUDA Cores: 6,912

Tensor Cores: 432 (Third-generation Tensor Cores)

GPU Memory: 80 GB

Memory Type: HBM2e

Memory Bandwidth: 1,935 GB/s (80 GB PCIe card; the 40 GB HBM2 model is rated at 1,555 GB/s)

Peak FP64 Performance: 9.7 TFLOPS (Double Precision)

Peak FP32 Performance: 19.5 TFLOPS (Single Precision)

Tensor Performance (FP16/BF16): 312 TFLOPS

Tensor Performance (TF32): 156 TFLOPS

Tensor Performance (INT8): 624 TOPS

Tensor Performance (INT4): 1,248 TOPS

NVLink: 3rd Generation NVLink (an NVLink Bridge connects up to 2 PCIe cards at up to 600 GB/s)

PCIe Gen 4: Yes

Form Factor: Dual-slot PCIe add-in card

Thermal Design Power (TDP): 300W (PCIe card; the SXM4 module is rated at 400W)

Interconnect: NVIDIA NVLink, PCIe Gen 4

Supported Software: NVIDIA CUDA, cuDNN, TensorRT, Deep Learning SDK, HPC Libraries

Target Use Cases: AI Model Training, Deep Learning, Data Analytics, Scientific Computing, High-Performance Computing (HPC)
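
The peak FP32, FP64, and FP16 Tensor figures in the specifications can be sanity-checked with simple arithmetic: units × operations per clock × clock speed. The sketch below is only a rough check, not an official calculation. The 1.41 GHz boost clock, the half-rate FP64 ratio, and the 256 FP16 fused multiply-adds per Tensor Core per clock are assumptions that do not appear in this listing, and the helper name `peak_tflops` is made up for illustration.

```python
# Rough sanity check of the listed peak-throughput figures.
# ASSUMPTIONS (not stated in the listing): boost clock, FP64:FP32 ratio,
# and the per-Tensor-Core FP16 rate.

BOOST_CLOCK_HZ = 1.41e9       # assumed boost clock
CUDA_CORES = 6912             # from the specifications
TENSOR_CORES = 432            # from the specifications
OPS_PER_FMA = 2               # a fused multiply-add counts as two operations
FP16_FMA_PER_TENSOR_CORE = 256  # assumed dense FP16 FMAs per clock


def peak_tflops(units, fma_per_unit_per_clock, clock_hz=BOOST_CLOCK_HZ):
    """Peak throughput in TFLOPS: units * FMAs per clock * 2 * clock."""
    return units * fma_per_unit_per_clock * OPS_PER_FMA * clock_hz / 1e12


fp32 = peak_tflops(CUDA_CORES, 1)        # one FP32 FMA per CUDA core per clock
fp64 = peak_tflops(CUDA_CORES, 0.5)      # assumed: FP64 runs at half the FP32 rate
fp16_tensor = peak_tflops(TENSOR_CORES, FP16_FMA_PER_TENSOR_CORE)

print(f"FP32:        {fp32:.1f} TFLOPS")
print(f"FP64:        {fp64:.1f} TFLOPS")
print(f"FP16 Tensor: {fp16_tensor:.1f} TFLOPS")
```

Under these assumptions the results land within rounding of the listed 19.5, 9.7, and 312 TFLOPS. These are theoretical peaks; sustained throughput on real workloads is typically lower.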