
India to launch generative AI model in 2025 amid DeepSeek frenzy

India’s Ambitious Plans to Launch Generative AI Model by 2025

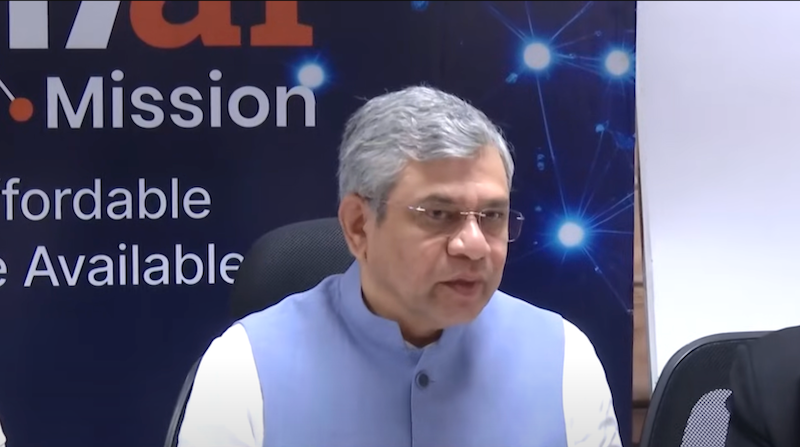

Union IT Minister Ashwini Vaishnaw recently announced that India plans to launch its own generative AI model in 2025, a significant step into the global AI race. The initiative is part of the country's effort to harness artificial intelligence for local needs while competing globally. It is backed by strategic investments in AI infrastructure and hardware, including 18,693 GPUs, of which 12,896 are Nvidia H100s, among the most advanced chips available for AI workloads.

India’s Commitment to AI Development and Infrastructure

India is also targeting $20 billion in foreign investment in data centers over the next three years, underlining its commitment to building the infrastructure that AI development requires. The new generative AI model is being designed for India's many languages and cultures so that it can serve the country's vast and varied population. This homegrown model is intended to help India compete with leading global models while addressing local challenges more effectively.

“We believe that at least six major developers will be capable of developing AI models within the next six to eight months, with a more optimistic estimate of four to six months,” Vaishnaw stated.

The AI Landscape and the Rise of Competitive AI Models

India's announcement comes as the AI race intensifies, most visibly with the release of DeepSeek R1 by the Chinese startup DeepSeek. This open-source model has reportedly matched the performance of leading OpenAI models at a much lower cost, challenging conventional assumptions about how much hardware is needed to build advanced AI.

While the U.S. has long led AI innovation, countries such as China and India are emerging as formidable competitors, drawing on their large populations and cost-effective development strategies to advance rapidly. These countries are positioning themselves to compete without matching the massive infrastructure traditionally associated with AI development.

US Export Controls on AI Chips and Geopolitical Implications

The U.S. is considering tightening export controls on critical AI technologies, particularly high-performance Nvidia chips. These chips are essential for developing advanced AI models, and tighter restrictions on their export could limit the global market for U.S. companies and weaken their ability to compete internationally. The U.S. already restricts Nvidia's sales to China, including a ban on exporting the H100 processor and other advanced AI components. Further restrictions could deepen tensions between nations competing for technological supremacy in AI.

The Geopolitical Contest for AI Dominance

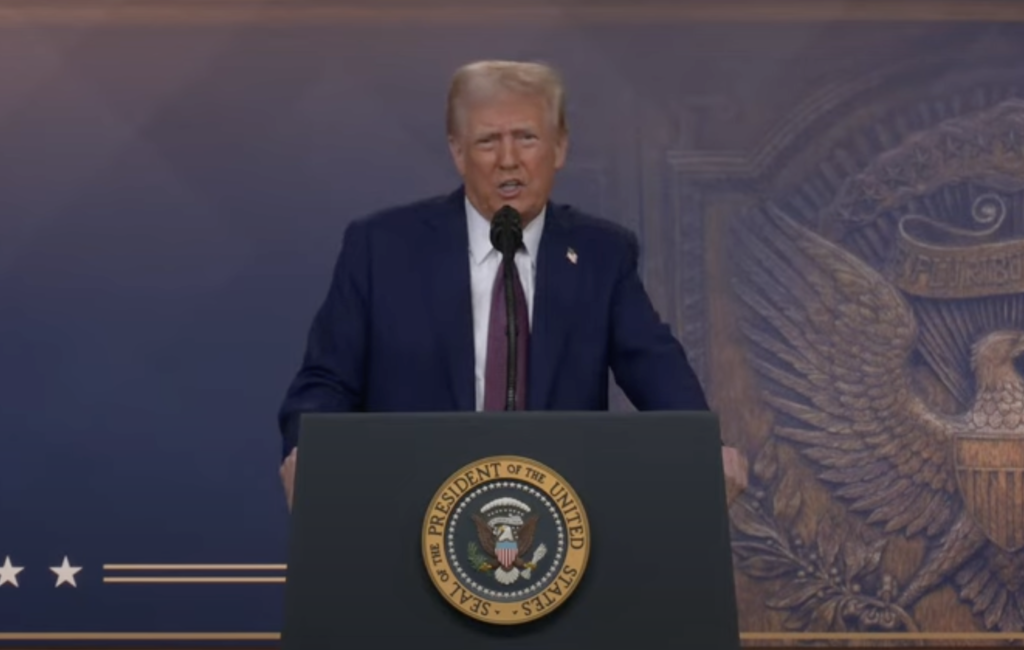

President Donald Trump's vow to make the U.S. the AI capital of the world is part of a broader effort to maintain American dominance in technology. Project Stargate, a $500 billion initiative led by OpenAI, Oracle, and SoftBank, aims to build robust AI infrastructure in the U.S. by expanding data centers, high-performance computing capacity, and semiconductor manufacturing.

However, some experts argue that tighter export controls could backfire by stifling innovation and reducing the global competitiveness of U.S. firms. With competitors such as India and China advancing rapidly, the U.S. may struggle to maintain its lead in AI development.

The Future of AI and Learning Technologies

AI technology is evolving rapidly, giving companies and individuals new opportunities to adopt advanced models. As India and other nations make significant strides in AI, specialized tools for learning and building with AI are becoming increasingly important. Whether you want to build AI models, explore machine learning, or develop AI-based applications, staying current with the latest trends and technologies is essential.

AI Model Training and the Importance of High-Performance GPUs

Training AI models requires significant computational power, and the right hardware is crucial for efficient training and deployment. High-performance GPUs, such as those used in generative AI development, run the large parallel matrix computations at the heart of modern models far faster than general-purpose processors, and they scale across many devices for complex workloads. Shorter training times let businesses and researchers iterate more often and build more accurate, robust models. To get the most out of AI, it is essential to invest in GPUs suited to AI workloads.
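To make the link between GPU count and training time more concrete, here is a rough back-of-envelope sketch in Python. It relies on the widely cited rule of thumb that training a dense transformer takes roughly 6 × parameters × tokens floating-point operations. The model size, token count, per-GPU throughput of 1e15 FLOP/s, and 40% utilization are illustrative assumptions, not figures from the announcement or from Nvidia's specifications. Only the 12,896-GPU count comes from the article.

```python
# Back-of-envelope training-time estimate. All model and hardware figures
# below are illustrative assumptions, not measured or official numbers.

def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute using the 6 * N * D rule of thumb."""
    return 6.0 * params * tokens


def training_days(params: float, tokens: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Estimate wall-clock days, assuming work is spread evenly across all GPUs."""
    # Sustained cluster throughput in FLOP/s: peak rate scaled by utilization.
    effective_throughput = num_gpus * peak_flops_per_gpu * utilization
    seconds = training_flops(params, tokens) / effective_throughput
    return seconds / 86_400  # 86,400 seconds per day


if __name__ == "__main__":
    # Hypothetical model: 70B parameters trained on 2 trillion tokens.
    params, tokens = 70e9, 2e12
    # Assumed ~1e15 FLOP/s peak per GPU at 40% utilization.
    for gpus in (1_000, 12_896):
        days = training_days(params, tokens, gpus, 1e15, 0.4)
        print(f"{gpus:>6} GPUs -> ~{days:.1f} days")
```

Because the sketch divides a fixed compute budget by aggregate throughput, adding GPUs shortens the estimate in direct proportion. That is an idealization: in real clusters, communication overhead tends to lower utilization as the number of GPUs grows.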