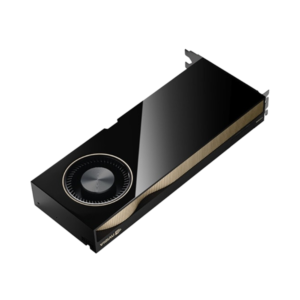

NVIDIA H200 Tensor Core GPU

The NVIDIA H200 is a GPU designed to drive the next generation of AI, deep learning, and high-performance computing (HPC) workloads. Built on the NVIDIA Hopper architecture and equipped with 141 GB of HBM3e memory at 4.8 TB/s, the H200 enables rapid model training, high-throughput data processing, and advanced scientific simulations.

Tailored for research institutions, data centers, and enterprises, the H200 is built to handle demanding AI tasks, from large-scale model development to complex neural network training and real-time inference. Its Tensor Cores and 4.8 TB/s of memory bandwidth accelerate deep learning applications, helping teams deliver AI solutions at scale.

Whether used for AI work in healthcare, autonomous systems, or natural language processing, the H200 helps organizations accelerate their research, improve productivity, and achieve transformative results. Designed for scalability, efficiency, and speed, it is well suited to enterprises building AI-focused infrastructure.

Specifications:

GPU Architecture: NVIDIA Hopper

CUDA Cores: 16,896

GPU Memory: 141 GB

Memory Type: HBM3e

Memory Bandwidth: 4.8 TB/s

FP64 Tensor Core: 60 TFLOPS

TF32 Tensor Core (with sparsity): 835 TFLOPS

BFLOAT16 Tensor Core (with sparsity): 1,671 TFLOPS

FP16 Tensor Core (with sparsity): 1,671 TFLOPS

FP8 Tensor Core (with sparsity): 3,341 TFLOPS

INT8 Tensor Core (with sparsity): 3,341 TOPS

NVLink: 2- or 4-way NVIDIA NVLink bridge, 900 GB/s per GPU

PCIe Gen5: 128 GB/s

Form Factor: PCIe, dual-slot, air-cooled

Thermal Design Power (TDP): Up to 600 W (configurable)

NVIDIA AI Enterprise: Included

Interconnect: NVIDIA NVLink, PCIe Gen 5

Supported Software: NVIDIA CUDA, cuDNN, TensorRT, Deep Learning SDK, HPC Libraries

Target Use Cases: AI Model Training, Deep Learning, Data Analytics, Scientific Computing, High-Performance Computing (HPC)
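To help interpret the specifications, here is a back-of-envelope Python sketch. It assumes the TF32 through INT8 Tensor Core figures are sparse peaks, roughly twice the dense rate (as with NVIDIA's 2:4 structured sparsity). It also assumes 1 GB = 10^9 bytes and counts model weights only, ignoring activations, KV cache, and framework overhead. The helper names `dense_peak`, `max_params_billions`, and `full_sweep_ms` are hypothetical, chosen for illustration; real throughput depends on the workload and is usually well below these peaks.

```python
# Back-of-envelope arithmetic from the H200 figures listed above.
# Assumptions (labelled, not from the spec sheet): sparse Tensor Core peaks
# are ~2x the dense peaks, 1 GB = 1e9 bytes, and only model weights count.

MEMORY_GB = 141         # HBM3e capacity
BANDWIDTH_TB_S = 4.8    # peak memory bandwidth

# Peak Tensor Core throughput with sparsity (TFLOPS; INT8 is in TOPS).
SPARSE_PEAKS = {
    "TF32": 835,
    "BF16": 1671,
    "FP16": 1671,
    "FP8": 3341,
    "INT8": 3341,
}


def dense_peak(sparse_peak: float) -> float:
    """Approximate dense peak, assuming sparsity doubles throughput."""
    return sparse_peak / 2


def max_params_billions(bytes_per_param: float, memory_gb: float = MEMORY_GB) -> float:
    """Upper bound on parameters (in billions) whose weights alone fit in memory."""
    # memory_gb * 1e9 bytes / bytes_per_param / 1e9 params-per-billion
    return memory_gb / bytes_per_param


def full_sweep_ms(memory_gb: float = MEMORY_GB,
                  bandwidth_tb_s: float = BANDWIDTH_TB_S) -> float:
    """Time to read the entire memory once at peak bandwidth, in milliseconds."""
    # GB / (TB/s) = 1e9 / 1e12 s = 1e-3 s, so the ratio is already in ms.
    return memory_gb / bandwidth_tb_s


if __name__ == "__main__":
    for fmt, peak in SPARSE_PEAKS.items():
        print(f"{fmt}: {peak} sparse -> ~{dense_peak(peak):.1f} dense")
    print(f"FP16/BF16 weights that fit: ~{max_params_billions(2):.1f}B params")
    print(f"FP8 weights that fit: ~{max_params_billions(1):.1f}B params")
    print(f"One full pass over HBM3e: ~{full_sweep_ms():.1f} ms")
```

Under these assumptions, 141 GB holds the 16-bit weights of a model of roughly 70 billion parameters. Streaming all of memory once at peak bandwidth takes about 29 ms, which bounds how quickly a memory-bound workload can touch every weight.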