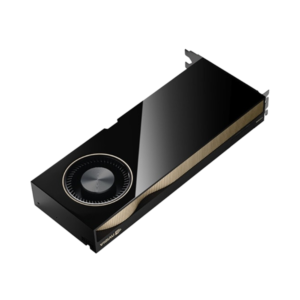

NVIDIA Tesla V100 32GB

$6,500.00

Condition: Brand New

Architecture: Volta

Processing Time: 2 – 4 days

MOQ: 1

NVIDIA Tesla V100 Tensor Core GPU

The NVIDIA Tesla V100 is a data center GPU designed to accelerate demanding AI, machine learning, and high-performance computing workloads. Built on the Volta architecture, it pairs 5,120 CUDA cores with 640 Tensor Cores and 32 GB of HBM2 memory for training large AI models, deep learning inference, and complex simulations.

With NVLink support for multi-GPU scaling, the V100 shortens model development time and scales efficiently across multi-GPU environments. It suits data centers and HPC systems working on AI, healthcare, and scientific research.

Specifications:

GPU Architecture: NVIDIA Volta

CUDA Cores: 5,120

Tensor Cores: 640 (for deep learning acceleration)

GPU Memory: 32 GB HBM2 (High Bandwidth Memory)

Memory Bandwidth: 900 GB/s

FP64 Performance: 7.8 TFLOPS (Double Precision)

FP32 Performance: 15.7 TFLOPS (Single Precision)

Tensor Performance (FP16): 125 TFLOPS

Tensor Performance (INT8): 250 TOPS

NVLink Support: Yes (for multi-GPU scaling and high-bandwidth communication between GPUs)

Form Factor: PCIe Gen 3

Thermal Design Power (TDP): 250W

Display Outputs: None (designed for use in data centers or high-performance systems)

Target Applications: AI model training, deep learning, scientific simulations, high-performance computing (HPC)
